ATLAS Open Data Analysis Service

Introduction

The ATLAS Open Data Analysis Service, developed by the Elementary Particle Physics Working Group at the University of Göttingen, provides access to LHC–ATLAS Open Data, analysis tools, and computing resources. It enables high-school and university students, educators, and physics enthusiasts to analyze real ATLAS data and explore advanced topics in high-energy and particle physics.

Beyond education, the service supports new teaching approaches and promotes citizen science. Tutorials use 13 TeV proton-proton collision data together with simulated samples for signal and background modeling. More details on the data structure and content can be found here.

EXPLORE: Motivation & Concept

While ATLAS Open Data is public, two barriers have limited wider use outside CERN:

- Technical barriers: analysis requires specialized HEP tools (e.g., ROOT) and more computing power than typical online environments provide.

- Access barriers: some advanced tools require CERN credentials; expert-centric interfaces hinder new users.

EXPLORE removes these obstacles by providing:

- Technical solution: ready-to-use containerized environments with ROOT, libraries, and dependencies; batch execution on Göttingen’s GoeGrid cluster for large-scale workloads.

- Access solution: no CERN account required; containers are preconfigured for remote data access so users can run analyses without local setup.

Outcome: Students, teachers, and independent researchers can perform real analyses without specialized hardware, institutional credentials, or complex configuration, turning open data from theory into practice.

Integration & Objectives

EXPLORE is part of PUNCH4NFDI, promoting FAIR data practices (Findable, Accessible, Interoperable, Reusable) across physics.

By repurposing GoeGrid, the WLCG Tier-2 site at the University of Göttingen, as an Open Analysis Resource, EXPLORE enables analysis of LHC–CERN Open Data without institutional affiliation.

Objectives:

- Accessibility

- Education & Outreach

- Scalability

- FAIR Principles

- Sustainability

GoeGrid Göttingen Computing Resources

Why Use GoeGrid Computing Resources?

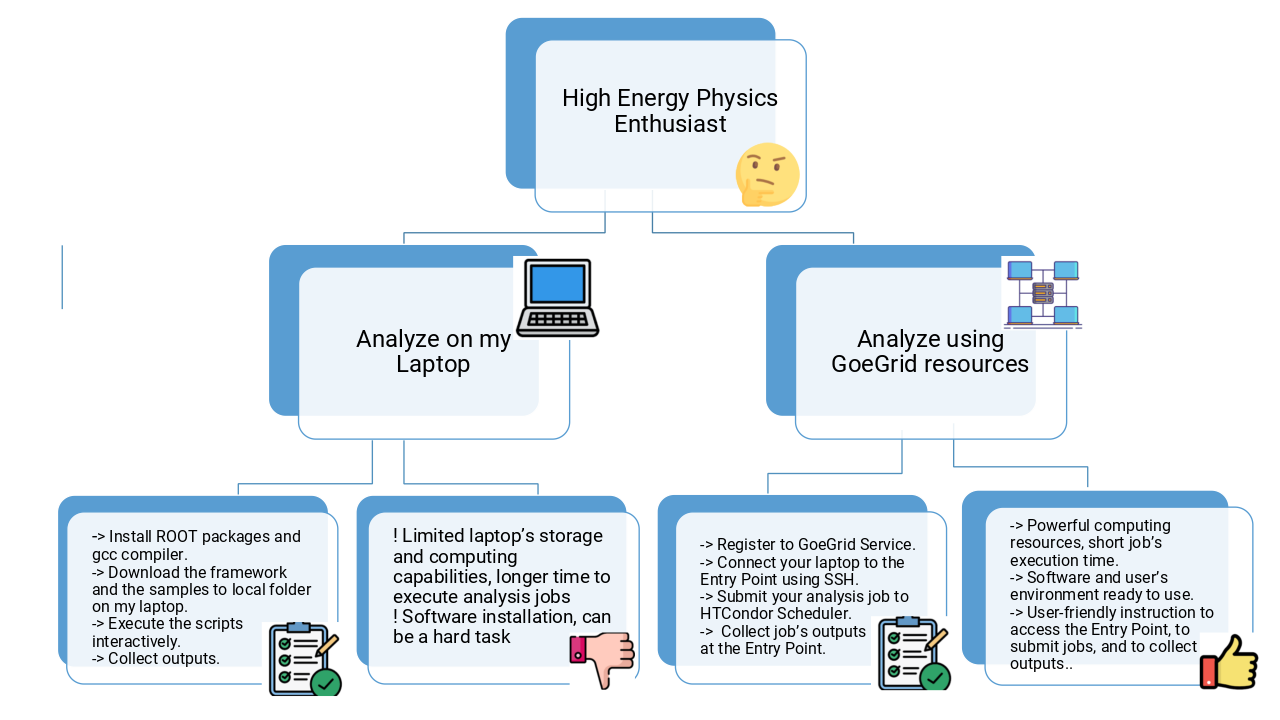

The diagram illustrates the advantage of using the GoeGrid computing cluster for ATLAS Open Data analysis over running it locally on a laptop with limited computing power.
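The advantage shown in the diagram comes mostly from parallelism: many independent input files can be processed at the same time instead of one after another. A rough back-of-the-envelope model is sketched below, assuming every file takes the same time and ignoring I/O and scheduling overhead. The workload numbers in the example are hypothetical, not GoeGrid measurements.

```python
import math


def estimated_wall_time(n_files: int, minutes_per_file: float, parallel_slots: int) -> float:
    """Rough wall-clock time (minutes) to process n_files independent files.

    Assumes equal processing time per file and that files run in "waves"
    of at most `parallel_slots` at once (no I/O or scheduling overhead).
    """
    if parallel_slots < 1:
        raise ValueError("parallel_slots must be at least 1")
    waves = math.ceil(n_files / parallel_slots)
    return waves * minutes_per_file


if __name__ == "__main__":
    # Hypothetical workload: 200 files at 6 minutes each.
    laptop = estimated_wall_time(200, 6, parallel_slots=4)     # e.g. a 4-core laptop
    cluster = estimated_wall_time(200, 6, parallel_slots=100)  # e.g. 100 batch slots
    print(f"laptop: {laptop / 60:.1f} h, cluster: {cluster:.0f} min")
```

Real speedups are smaller than this ideal, because data access and job start-up add overhead, but the scaling with the number of slots is the point the diagram makes.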

Note: Access to GoeGrid requires registration; see Registration & Access below.

Infrastructure & Operation

EXPLORE turns GoeGrid into a scalable platform for FAIR Open Data analysis using batch systems, containers, and real-time monitoring.

Core Components

- HTCondor Overlay Batch System (OBS): aggregates distributed compute resources into a unified job pool with dynamic scheduling.

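- Dynamic Resource Management (COBalD/TARDIS): scales resources automatically to match real-time workload demands. COBalD decides how many drones are needed, and TARDIS starts and stops them on the underlying resources.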
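To make demand-driven scaling concrete, here is a minimal feedback-loop sketch. It is loosely modelled on the kind of utilisation/allocation rule a COBalD controller applies, but `PoolState`, `adjust_demand`, and the threshold values are hypothetical; this is not COBalD's API or GoeGrid's configuration.

```python
from dataclasses import dataclass


@dataclass
class PoolState:
    """Hypothetical snapshot of the drone pool seen by the controller."""
    demand: float       # drones currently requested
    utilisation: float  # fraction (0..1) of drone resources doing useful work
    allocation: float   # fraction (0..1) of drone resources claimed by jobs


def adjust_demand(state: PoolState,
                  low_utilisation: float = 0.5,
                  high_allocation: float = 0.9,
                  rate: float = 1.0) -> float:
    """Return the new drone demand using a simple linear feedback rule."""
    if state.utilisation < low_utilisation:
        # Drones are mostly idle: release capacity, but never go below zero.
        return max(0.0, state.demand - rate)
    if state.allocation > high_allocation:
        # Almost everything is claimed: request more drones.
        return state.demand + rate
    # Load is within the comfortable band: keep the current demand.
    return state.demand


if __name__ == "__main__":
    demand = 10.0
    # Hypothetical sequence of (utilisation, allocation) readings over time.
    for util, alloc in [(0.95, 0.97), (0.92, 0.95), (0.7, 0.8), (0.2, 0.3)]:
        demand = adjust_demand(PoolState(demand, util, alloc))
        print(f"utilisation={util:.2f} allocation={alloc:.2f} -> demand={demand}")
```

Stepping demand by a fixed rate keeps the pool from oscillating wildly when the workload fluctuates; a real deployment tunes the thresholds and rate to how quickly drones can start.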

Drone Architecture & Environments

- Drones: placeholder jobs that start an HTCondor StartD and register as worker nodes, forming a seamless execution pool.

- Scheduling: the HTCondor Schedd queues jobs; once the central manager's negotiator matches them to available drones, the Schedd dispatches them for efficient execution.

- Reproducibility: version-controlled container setups with ROOT and dependencies are deployed at runtime.
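In HTCondor, matching jobs to drones is done by the negotiator, which evaluates ClassAd `Requirements` and `Rank` expressions. The sketch below replaces that with a deliberately simplified greedy first-fit on CPU and memory requests, only to show how queued jobs end up on registered drones. The `Drone`, `Job`, and `match_jobs` names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Drone:
    name: str
    cpus: int
    memory_mb: int


@dataclass
class Job:
    job_id: int
    request_cpus: int
    request_memory_mb: int


def match_jobs(jobs: List[Job], drones: List[Drone]) -> Dict[int, str]:
    """Greedy first-fit: place each queued job on the first drone with room.

    Jobs that fit nowhere are left out of the result (they stay idle in the queue).
    """
    free = {d.name: [d.cpus, d.memory_mb] for d in drones}
    assignment: Dict[int, str] = {}
    for job in jobs:  # process jobs in queue order
        for drone in drones:
            cpus, mem = free[drone.name]
            if cpus >= job.request_cpus and mem >= job.request_memory_mb:
                free[drone.name] = [cpus - job.request_cpus, mem - job.request_memory_mb]
                assignment[job.job_id] = drone.name
                break
    return assignment


if __name__ == "__main__":
    drones = [Drone("drone-1", cpus=4, memory_mb=8000), Drone("drone-2", cpus=2, memory_mb=4000)]
    jobs = [Job(1, 2, 2000), Job(2, 2, 4000), Job(3, 2, 2000), Job(4, 4, 1000)]
    # Job 4 needs 4 free CPUs, which no drone has left, so it stays idle.
    print(match_jobs(jobs, drones))  # {1: 'drone-1', 2: 'drone-1', 3: 'drone-2'}
```

Idle jobs like job 4 are exactly the signal a demand-driven resource manager can use to request additional drones.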

Software Provisioning

- Apptainer Containers + CERN CVMFS: provide standardized analysis environments on all worker nodes.

- wlcg-wn container: jobs run inside pre-defined images delivered via CVMFS and initialized automatically by job configuration.
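Users do not launch containers themselves; the job configuration does this automatically. Purely to illustrate what such a launch can look like, the sketch below builds an `apptainer exec` command that binds `/cvmfs` into the container and runs a ROOT macro in batch mode. The image path, the bind list, and the macro name `analysis.C` are placeholders; the exact options used on GoeGrid are not documented here.

```python
from __future__ import annotations

import shlex

# Placeholder only: the actual CVMFS path of the wlcg-wn image is not given here.
HYPOTHETICAL_IMAGE = "/cvmfs/<repository>/<wlcg-wn-image>"


def apptainer_command(image: str, command: list[str],
                      binds: tuple[str, ...] = ("/cvmfs",)) -> list[str]:
    """Build an `apptainer exec` argument list that runs `command` inside `image`."""
    args = ["apptainer", "exec"]
    for path in binds:
        # Make host paths such as /cvmfs visible inside the container.
        args += ["--bind", path]
    args.append(image)
    args += command
    return args


if __name__ == "__main__":
    # Hypothetical ROOT macro, run without graphics (-b) and quitting when done (-q).
    cmd = apptainer_command(HYPOTHETICAL_IMAGE, ["root", "-b", "-q", "analysis.C"])
    print(shlex.join(cmd))
```

Building the command as a list rather than a single string avoids shell-quoting problems when it is passed to a process launcher.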

Monitoring

- Prometheus: collects metrics (CPU, memory, disk I/O, network, HTCondor job stats) from the submit/entry node.

- Grafana: visualizes system health and performance for proactive issue detection.
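Prometheus collects metrics as plain text in its exposition format: `# HELP` and `# TYPE` lines followed by `name{label="value"} number` samples. The sketch below renders HTCondor job counts per state in that format. The metric name `condor_jobs` and the input counts are hypothetical, and the exporter actually running on the entry node is not specified here.

```python
from typing import Dict


def _escape(value: str) -> str:
    """Escape a label value as required by the Prometheus text format."""
    return value.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")


def render_job_metrics(job_counts: Dict[str, int], metric: str = "condor_jobs") -> str:
    """Render job counts per state in the Prometheus text exposition format."""
    lines = [
        f"# HELP {metric} Number of HTCondor jobs per state.",
        f"# TYPE {metric} gauge",
    ]
    for state in sorted(job_counts):
        lines.append(f'{metric}{{state="{_escape(state)}"}} {job_counts[state]}')
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical snapshot of the queue on the submit/entry node.
    print(render_job_metrics({"idle": 12, "running": 40, "held": 1}), end="")
```

A gauge fits here because job counts go up and down; Grafana can then plot each `state` label as its own time series.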

Registration & Access

- Fill in the registration form with a valid email address and submit your SSH public key.

- Receive confirmation and access instructions.

- SSH to the entry node:

ssh -i ~/.ssh/id_rsa <username>@punchlogin.goegrid.gwdg.de
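A common registration mistake is pasting the private key (`~/.ssh/id_rsa`) instead of the public one (`~/.ssh/id_rsa.pub`). The helper below is a minimal sanity check, assuming a key in the standard one-line OpenSSH format; it checks the key type and the type string embedded in the base64 blob, but does not validate the key material itself. `looks_like_public_key` is a hypothetical helper, not part of the registration process.

```python
import base64
import binascii
import struct

# Common OpenSSH public key types (not an exhaustive list).
KNOWN_KEY_TYPES = {
    "ssh-rsa",
    "ssh-ed25519",
    "ecdsa-sha2-nistp256",
    "ecdsa-sha2-nistp384",
    "ecdsa-sha2-nistp521",
}


def looks_like_public_key(line: str) -> bool:
    """Sanity-check one line of the form '<type> <base64-blob> [comment]'."""
    parts = line.strip().split()
    if len(parts) < 2 or parts[0] not in KNOWN_KEY_TYPES:
        return False  # e.g. a private key file starts with '-----BEGIN'
    try:
        blob = base64.b64decode(parts[1], validate=True)
    except (binascii.Error, ValueError):
        return False
    if len(blob) < 4:
        return False
    # The blob starts with a length-prefixed string repeating the key type.
    (type_len,) = struct.unpack(">I", blob[:4])
    return blob[4:4 + type_len] == parts[0].encode("ascii")


if __name__ == "__main__":
    import sys
    from pathlib import Path

    path = Path(sys.argv[1]) if len(sys.argv) > 1 else Path.home() / ".ssh" / "id_rsa.pub"
    if path.exists():
        ok = looks_like_public_key(path.read_text())
        print("OK: looks like a public key" if ok else "Not a valid public key line")
    else:
        print(f"{path} not found; generate a key pair with ssh-keygen first")
```

If no key exists yet, `ssh-keygen -t rsa -b 4096` creates a key pair, by default at the `~/.ssh/id_rsa` location used in the ssh command above.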


Understanding HTCondor: The High Throughput Computing System

After registering and obtaining a user account for GoeGrid, take a moment to familiarize yourself with HTCondor, the software framework that executes your analysis tasks.

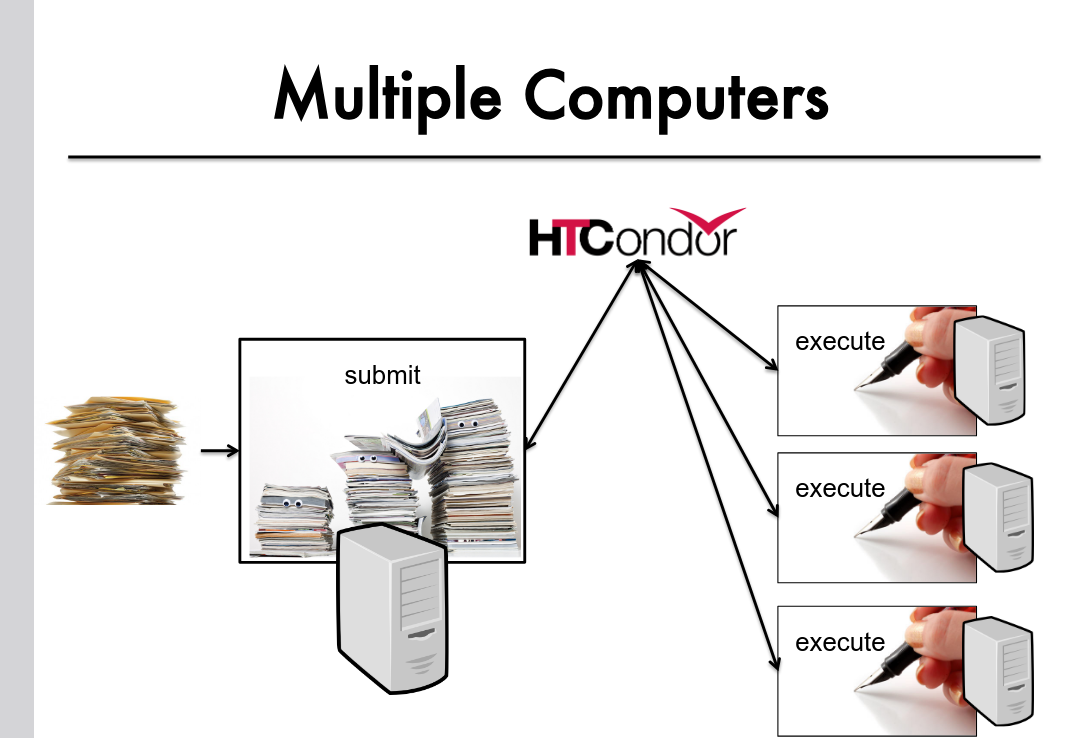

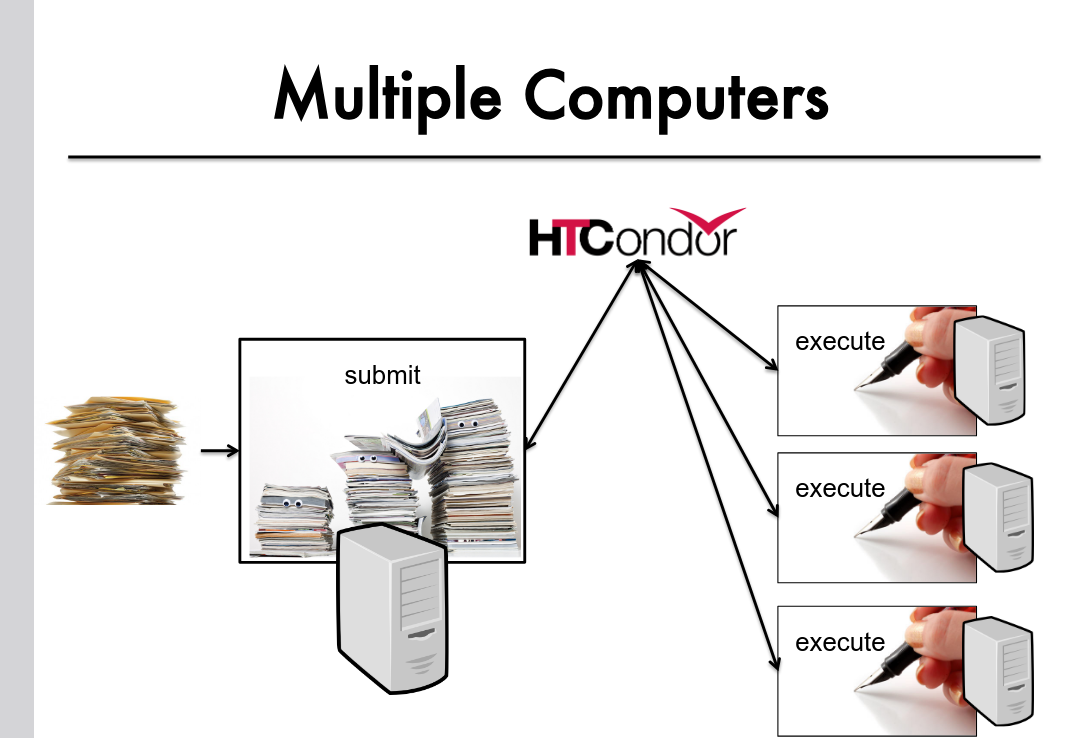

HTCondor, developed by the Center for High Throughput Computing at the University of Wisconsin–Madison, schedules and runs computing tasks across multiple computers.

When a user submits tasks to the HTCondor queue at the Submit/Entry Point, the system schedules and runs them on available Execute Nodes, managing tasks on the user's behalf.

To use HTCondor for executing your analysis, you must define the computing task, known as a “job.” A job consists of three main components:

- Input: The data you want to process.

- Executable Script: The program you wish to run.

- Output: The results of your computation.

These components are defined in a Job Description Language (JDL) file, called a submit description file in the HTCondor documentation, which is submitted at the Submit/Entry Point.

The JDL file also specifies output, error, and log files to capture job information. You will find more details about creating and submitting a JDL file in the section below.
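As a preview of what such a file contains, the sketch below assembles a minimal HTCondor submit description from the three job components: the executable, the input files to transfer, and the output, error, and log files. The commands used (`executable`, `arguments`, `transfer_input_files`, `output`, `error`, `log`, `request_cpus`, `request_memory`, `queue`) are standard HTCondor submit commands, but the helper function, the file names, and the resource requests are hypothetical; use the values the service's own instructions specify for GoeGrid.

```python
from typing import Sequence


def build_submit_description(executable: str,
                             input_files: Sequence[str] = (),
                             arguments: str = "",
                             request_cpus: int = 1,
                             request_memory_mb: int = 2048) -> str:
    """Return the text of a minimal HTCondor submit description (the "JDL file")."""
    lines = [f"executable = {executable}"]  # the program to run
    if arguments:
        lines.append(f"arguments = {arguments}")
    if input_files:
        # The input data, copied to the execute node before the job starts.
        lines.append(f"transfer_input_files = {', '.join(input_files)}")
    lines += [
        "should_transfer_files = YES",
        "when_to_transfer_output = ON_EXIT",       # copy results back when the job ends
        "output = job_$(Cluster).$(Process).out",  # the job's stdout
        "error = job_$(Cluster).$(Process).err",   # the job's stderr
        "log = job_$(Cluster).log",                # HTCondor's event log for the job
        f"request_cpus = {request_cpus}",
        f"request_memory = {request_memory_mb}",   # in MiB
        "queue",                                   # submit one job with these settings
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical file names; replace them with your own script and data.
    print(build_submit_description("run_analysis.sh", ["data_A.root"], arguments="data_A.root"), end="")
```

Saved as, for example, `analysis.sub`, the file would be submitted from the entry node with `condor_submit analysis.sub`, and the job's progress followed with `condor_q`.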

Analyzing with GoeGrid Resources

Learn more about HTCondor and how it manages your computing tasks by visiting the official HTCondor website.

How Does HTCondor Manage Job Submission